Unlock Google Search Console Data With Google Colab & GSC API

As a career marketing & SEO professional, I have a lot of different skills. I even taught myself Bootstrap when building one of the sites that I own. However, coding & engineering are just not my thing. As a marketing director, I always worked with colleagues who had those skills and could do certain tasks for me, but as a freelancer that’s not a luxury I have.

My story actually goes back to my childhood. My dad double-majored in math & computer science at UCLA and began his career in the early 70s as a mainframe computer programmer. Think of those old-school machines that would take up entire rooms in an office building. A surprising number of businesses still rely on this sort of architecture, so these skills have always been in high demand and pay very well. I remember going into the office with my dad on the weekends sometimes and watching him work at home after hours, and it looked so boring. He enjoyed the work, but it made zero sense to me, and I knew early on that I would not follow my dad’s career path, probably to his chagrin.

I always wanted to be an artist, whether a musician, photographer or art director, and somehow ended up in marketing because it was more interesting to me than more scientific business careers like programming and finance. The closest thing to coding that I have done was in the 1980s, when my dad would buy computer gaming magazines in which developers would code games for the Commodore 64 and include the code in the back of the magazine. My dad & I would spend hours testing it out. Half the time it was full of errors, so we had to troubleshoot before we could eventually get it to work.

What I am, however, is a data junkie. I can’t imagine doing SEO and performance marketing in 2025 without being serious about data. SEO is a complex discipline that draws on technical, creative, website-code & analytical skills, but in the end it’s all about data.

Google Search Console is invaluable for us SEOs; however, Google limits us to 1,000 rows of data in its interface. I don’t understand why Google has always done this when it makes the data available via API. Unless you have enterprise SEO tools like JetOctopus (an amazing tool BTW) that have Search Console API integration, you’re stuck with that limit. That’s why I’ve been having fun with Google AI Studio & Google Colab, creating AI-generated Python scripts that extract the GSC API data.

It took me about 2 hours of research and trial & error before I was able to generate the right Python code to extract the Search Console data. Initially, it was extracting daily totals for each query, but I found myself limited to 10,000 rows of data, which covered only a fraction of the total data I needed over 12 months. So the next step was to have the script aggregate the total data for each query instead, after which I did not encounter a limit on the amount of data I could extract. For the web search data I extracted about 94,000 rows, and after spot-checking the top queries I was satisfied that I got all of the data. Then I had Google Colab create another script to do the same thing for Google Images traffic, which returned about 93,000 rows. Pretty crazy that I’m getting more than 90x the amount of data via this method compared to what Google Search Console will provide!
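The switch from daily totals to per-query totals comes down to the `dimensions` list sent in the request body. Here is a minimal sketch of that difference, assuming the daily version used the API's `date` dimension alongside `query` (my first script isn't shown here, so that part is a guess). `build_request_body` is a hypothetical helper made up for illustration, and nothing in this block calls the API.

```python
def build_request_body(start_date, end_date, dimensions, row_limit=5000, start_row=0):
    """Return a Search Analytics request body as a plain dict (hypothetical helper)."""
    return {
        "startDate": start_date,
        "endDate": end_date,
        "dimensions": list(dimensions),
        "rowLimit": row_limit,
        "startRow": start_row,
    }

# One row per query per day: the row count grows with every day in the range.
daily_body = build_request_body("2024-01-01", "2025-01-14", ["date", "query"])

# One row per query for the whole date range: far fewer rows for the same period.
aggregated_body = build_request_body("2024-01-01", "2025-01-14", ["query"])

print(daily_body["dimensions"])
print(aggregated_body["dimensions"])
```

Either dict could be passed as the `body` argument of the query call; only the grouping of the returned rows changes.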

Give the two scripts below a try in Google Colab and see how much data you can extract. First, however, you’ll need to enable the Search Console API in Google Cloud, create a service account and download its JSON key. Then add the service account’s email address as a user in your Google Search Console property permissions. Upload the JSON key to Google Colab and update the SERVICE_ACCOUNT_FILE line with the file location. You’ll also need to update property_uri with your own site’s URL.
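If you’re not sure which email address to add in Search Console, it is stored in the JSON key itself under `client_email`. The sketch below reads it back so you can copy it. `read_service_account_email` is a hypothetical helper, and the demo writes a throwaway file with placeholder values rather than a real key.

```python
import json
import os
import tempfile

def read_service_account_email(key_path):
    """Return the client_email field from a service account JSON key file."""
    with open(key_path, "r", encoding="utf-8") as handle:
        key_data = json.load(handle)
    return key_data["client_email"]

# Demonstration with a throwaway file containing placeholder values only.
# A real key file has more fields (private key, project ID, and so on).
placeholder_key = {
    "type": "service_account",
    "client_email": "example@example-project.iam.gserviceaccount.com",
}

with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "demo-key.json")
    with open(demo_path, "w", encoding="utf-8") as handle:
        json.dump(placeholder_key, handle)
    demo_email = read_service_account_email(demo_path)

print(demo_email)
```

In Colab you would point `read_service_account_email` at the path of the key you uploaded instead of the demo file.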

Python Code To Extract All Google Search Console API Data

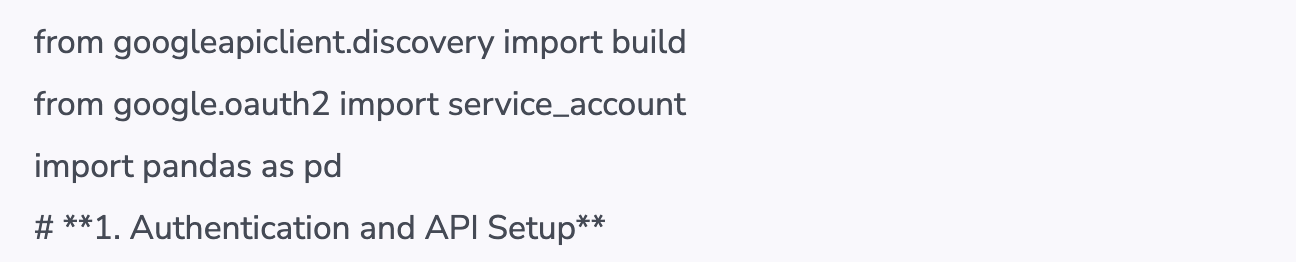

from googleapiclient.discovery import build
from google.oauth2 import service_account
import pandas as pd

# 1. Authentication and API Setup
# Replace with your credentials and site URL:
SERVICE_ACCOUNT_FILE = 'API KEY JSON local file'
SCOPES = ['https://www.googleapis.com/auth/webmasters.readonly']

creds = service_account.Credentials.from_service_account_file(SERVICE_ACCOUNT_FILE, scopes=SCOPES)
webmasters_service = build('webmasters', 'v3', credentials=creds)

property_uri = "https://www.enteryourdomain.com/"

# 2. Data Retrieval with Pagination
def get_search_analytics_data(property_uri, start_date, end_date, dimensions, row_limit=5000, start_row=0):
    """Fetch one page of Search Console data, starting at start_row."""
    response = webmasters_service.searchanalytics().query(
        siteUrl=property_uri,
        body={
            'startDate': start_date,
            'endDate': end_date,
            'dimensions': dimensions,
            'rowLimit': row_limit,
            'startRow': start_row
        }
    ).execute()
    return response

start_date = "2024-01-01"
end_date = "2025-01-14"  # Adjust as needed
dimensions = ["query"]  # Aggregate by query
row_limit = 5000  # The API accepts up to 25,000 rows per request
all_data = []

# Loop to retrieve data in batches of 'row_limit' until all data is fetched
start_row = 0
while True:
    response = get_search_analytics_data(property_uri, start_date, end_date, dimensions, row_limit, start_row)
    if 'rows' in response:
        all_data.extend(response['rows'])
        start_row += row_limit
        # Check if there are more rows to fetch
        if len(response['rows']) < row_limit:
            break  # Exit the loop if all rows are retrieved
    else:
        break  # Exit the loop if no rows found in the response

# 3. Data Processing and CSV Export
if all_data:
    df = pd.DataFrame(all_data)
    # Each row's 'keys' list holds one value per requested dimension
    keys_df = pd.DataFrame(df['keys'].tolist(), columns=dimensions)
    df = pd.concat([df, keys_df], axis=1)
    df = df.drop(columns=['keys'])
    df = df.rename(columns={'clicks': 'total_clicks', 'impressions': 'total_impressions', 'ctr': 'avg_ctr', 'position': 'avg_position'})

    # The API already returns CTR as a decimal between 0 and 1,
    # so it only needs a numeric conversion, not a division by 100
    numeric_cols = ['total_clicks', 'total_impressions', 'avg_ctr', 'avg_position']
    df[numeric_cols] = df[numeric_cols].apply(pd.to_numeric, errors='coerce')

    # Save aggregated data to CSV
    df.to_csv('gsc_aggregated_query_data.csv', index=False)
    print("Aggregated query data saved to gsc_aggregated_query_data.csv")
else:
    print("No data available for specified parameters")
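The pagination loop is the part that gets you past a single page of results, so it’s worth seeing in isolation. Here is a rough sketch of the same stop conditions (a short page or a response with no `rows` ends the loop), run against a made-up stand-in for the API so it works without credentials. `fetch_all_rows` and `make_fake_fetch` are hypothetical names, and the rows are dummy data.

```python
def fetch_all_rows(fetch_page, row_limit=5000):
    """Collect rows from successive pages until a short or empty page arrives."""
    all_rows = []
    start_row = 0
    while True:
        response = fetch_page(start_row, row_limit)
        rows = response.get("rows", [])
        all_rows.extend(rows)
        if len(rows) < row_limit:
            break  # A short (or missing) page means there is nothing left
        start_row += row_limit
    return all_rows

def make_fake_fetch(total_rows):
    """Build a stand-in for the API call that serves made-up rows (no network)."""
    def fake_fetch(start_row, row_limit):
        end = min(start_row + row_limit, total_rows)
        rows = [{"keys": [f"query {i}"], "clicks": 0} for i in range(start_row, end)]
        # Mimic a response that omits 'rows' entirely when nothing is left
        return {"rows": rows} if rows else {}
    return fake_fetch

rows_partial = fetch_all_rows(make_fake_fetch(12), row_limit=5)  # pages of 5, 5, 2
rows_exact = fetch_all_rows(make_fake_fetch(10), row_limit=5)    # pages of 5, 5, then empty
print(len(rows_partial), len(rows_exact))
```

The second case matters: when the total is an exact multiple of the page size, the loop makes one extra request that comes back empty before it stops.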

Python Code to Extract All Google Search Console API Image Data

from googleapiclient.discovery import build
from google.oauth2 import service_account
import pandas as pd

# 1. Authentication and API Setup
# Replace with your credentials and site URL:
SERVICE_ACCOUNT_FILE = 'API KEY JSON local file'
SCOPES = ['https://www.googleapis.com/auth/webmasters.readonly']

creds = service_account.Credentials.from_service_account_file(SERVICE_ACCOUNT_FILE, scopes=SCOPES)
webmasters_service = build('webmasters', 'v3', credentials=creds)

property_uri = "https://www.enteryourdomain.com/"

# 2. Data Retrieval with Pagination
def get_search_analytics_data(property_uri, start_date, end_date, dimensions, row_limit=5000, start_row=0, search_type='web'):
    """Fetches Search Console data using the API.

    Includes start_row for pagination to retrieve more data.
    search_type: 'web' for web search, 'image' for image search
    """
    response = webmasters_service.searchanalytics().query(
        siteUrl=property_uri,
        body={
            'startDate': start_date,
            'endDate': end_date,
            'dimensions': dimensions,
            'rowLimit': row_limit,
            'startRow': start_row,
            'searchType': search_type  # Specify search type
        }
    ).execute()
    return response

start_date = "2024-01-01"
end_date = "2025-01-14"  # Adjust as needed
dimensions = ["query"]  # Aggregate by query
row_limit = 5000  # The API accepts up to 25,000 rows per request
all_data = []

# Loop to retrieve data in batches of 'row_limit' until all data is fetched
start_row = 0
while True:  # Keep fetching until no more rows are returned
    response = get_search_analytics_data(
        property_uri, start_date, end_date, dimensions, row_limit, start_row, search_type='image'
    )
    if 'rows' in response:
        all_data.extend(response['rows'])
        start_row += row_limit  # Update start row for next batch
        # Check if there are more rows to fetch
        if len(response['rows']) < row_limit:
            break  # Exit the loop if all rows are retrieved
    else:
        break  # Exit the loop if no rows found in the response

# 3. Data Processing and CSV Export
if all_data:
    df = pd.DataFrame(all_data)
    # Each row's 'keys' list holds one value per requested dimension
    keys_df = pd.DataFrame(df['keys'].tolist(), columns=dimensions)
    df = pd.concat([df, keys_df], axis=1)
    df = df.drop(columns=['keys'])
    df = df.rename(columns={'clicks': 'total_clicks', 'impressions': 'total_impressions', 'ctr': 'avg_ctr', 'position': 'avg_position'})

    # The API already returns CTR as a decimal between 0 and 1,
    # so it only needs a numeric conversion, not a division by 100
    numeric_cols = ['total_clicks', 'total_impressions', 'avg_ctr', 'avg_position']
    df[numeric_cols] = df[numeric_cols].apply(pd.to_numeric, errors='coerce')

    # Save aggregated data to CSV
    df.to_csv('gsc_aggregated_image_query_data.csv', index=False)
    print("Aggregated image query data saved to gsc_aggregated_image_query_data.csv")
else:
    print("No data available for specified parameters")
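Once you have a CSV, a quick spot-check is to recompute CTR from clicks and impressions and compare it to the exported `avg_ctr` column. The API reports CTR as a fraction between 0 and 1, so the two should line up. This is only a sketch: `find_ctr_mismatches` is a hypothetical helper, the tolerance is an arbitrary choice, and the two rows are made-up sample data standing in for a real export.

```python
import csv
import io

# Made-up sample rows using the same column names the scripts export
sample_csv = io.StringIO(
    "total_clicks,total_impressions,avg_ctr,avg_position,query\n"
    "50,1000,0.05,3.2,example query one\n"
    "5,400,0.0125,11.7,example query two\n"
)

def find_ctr_mismatches(csv_file, tolerance=1e-6):
    """Return queries whose exported CTR differs from clicks / impressions."""
    mismatches = []
    for row in csv.DictReader(csv_file):
        impressions = float(row["total_impressions"])
        if impressions == 0:
            continue  # CTR is undefined without impressions
        expected_ctr = float(row["total_clicks"]) / impressions
        if abs(expected_ctr - float(row["avg_ctr"])) > tolerance:
            mismatches.append(row["query"])
    return mismatches

mismatches = find_ctr_mismatches(sample_csv)
print(mismatches)
```

To run it on a real export, open the CSV with `open('gsc_aggregated_query_data.csv', newline='')` and pass the file object in place of `sample_csv`. If every row is off by a factor of 100, the CTR column was scaled twice somewhere along the way.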

These are just two ways SEOs can leverage Python to analyze data. I think as an industry we have only just begun to scratch the surface of what’s possible. Rather than fear AI, I’m excited to have these AI tools here to help with productivity.