Most SEOs use some combination of Google Search Console (GSC), SEMRush and Ahrefs. These are all wonderful tools that do different things. Google Search Console is where most of our first-party search data comes from, alongside Google Analytics 4 (GA4). SEMRush excels at competitor analysis and keyword research. Ahrefs is great for link monitoring and site audits. By nature, these tools appeal to a broad range of marketers and business people. The limitation is that search engines have long since evolved past keyword tracking. Google Search Console, for example, only reports part of the query data (anonymized queries are withheld and rows are capped), so you might see 40% of it at best. Search queries largely disappeared from Google Analytics reporting over a decade ago, in the Universal Analytics era. Exact match page optimization largely ended by the late 2010s. SEO rank tracking tools also need to evolve ASAP. The concept of ranking doesn’t really exist in the same way on AI search.

What Can We Optimize Around Now?

If you’re doing SEO effectively, you’ll notice that each page can rank for an array of search queries. Search is semantic now, built around entities and meaning rather than exact strings. While not perfect, this makes on-page optimization much more natural than in the old days of SEO, when you would hit a certain keyword density to shoehorn in a specific phrase even if readability suffered as a result. The challenge is that these popular tools still report in the old lexical way (exact match keywords), which is fundamentally disconnected from how SEO actually works now.

How Can We Get Better SEO Data?

There’s so much focus on LinkedIn on AI-generated content at scale. I’m not interested in spamming the internet at scale. What I’m interested in is analyzing search data at scale in new ways that fit the modern paradigm of SEO. If Google actually wanted to be helpful (which will obviously never be a priority for a company of their size), they could easily build in the type of functionality that I’ll share here.

Let’s imagine that we’re in a meeting with an executive to talk about search performance. What would we show them if we were limited to GSC, SEMRush, GA4, etc.? We would show them individual keyword rankings, maybe tagged keyword rankings, URL-level analytics performance, and sampled search query clicks for individual keywords. None of these really tells a story at the big-picture level. Digging deeper with aggregation requires either a lot of manual work or serious Excel skills.

GSC + BigQuery or API + Python

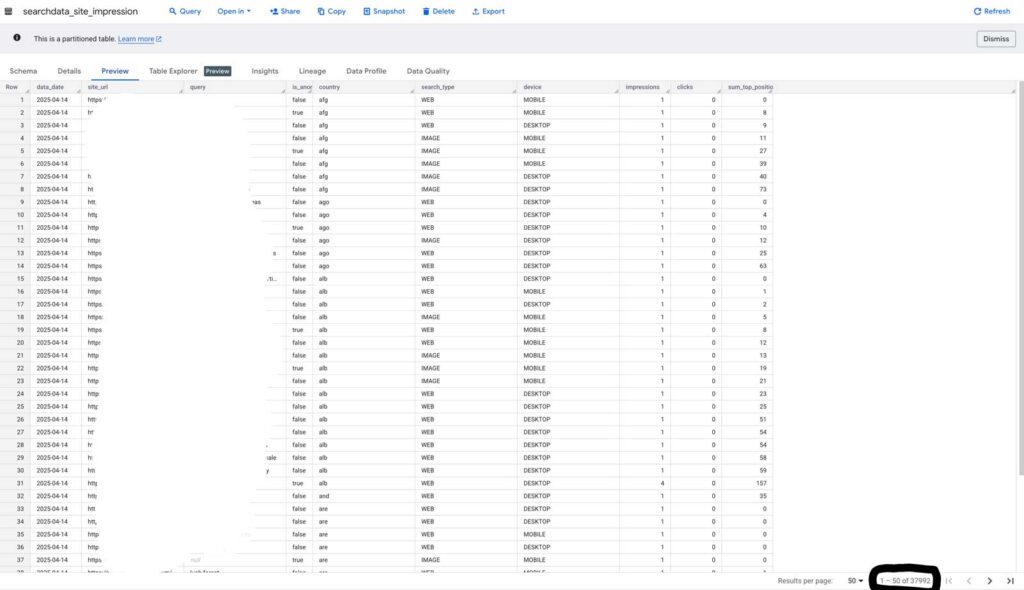

Enter AI tools. To varying degrees, ChatGPT, Gemini, Claude, etc. can analyze data and write code. Rather than dwelling on what these tools may replace in the future, let’s focus on how we can leverage them to our benefit. Let’s take Google Search Console for example. If I’m in this meeting, what I want to be able to show is how the site is performing based on topics or themes. The sampling issue? We’ll never be able to get all of the data due to “privacy”, but we can use BigQuery or the Search Console API to download all of the available search data, which is way better than being limited to the 1,000 rows the GSC interface gives you. Let’s be honest, the 1,000 row limit is pretty useless for most businesses. Need a way to extract from the API? Use AI to generate a Python script, run it in Google Colab and save the GSC data to a CSV file. Need to analyze more than 16 months’ worth of Google data? Export your GSC data to BigQuery and store it indefinitely (the export only collects data from the day you turn it on, so set it up early). Then have Gemini write a SQL query for BigQuery to export your search data to a CSV file or connect it to Looker Studio.
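To make the API route concrete, here’s a minimal sketch of the kind of script an AI assistant might hand you. The request fields (`startDate`, `endDate`, `dimensions`, `rowLimit`, `startRow`) are the Search Analytics API’s own, and 25,000 is its per-request row cap. The helper names `fetch_all_rows` and `rows_to_csv` are my own, and the `query_page` callable is an assumption I’ve added so the paging logic can be tried without credentials.

```python
import csv
from typing import Callable, Iterator, List

ROW_LIMIT = 25_000  # most rows the Search Analytics API returns per request


def fetch_all_rows(
    query_page: Callable[[dict], dict],
    start_date: str,
    end_date: str,
    dimensions: List[str],
    row_limit: int = ROW_LIMIT,
) -> Iterator[dict]:
    """Yield every available row by advancing startRow until a short page returns."""
    start_row = 0
    while True:
        body = {
            "startDate": start_date,
            "endDate": end_date,
            "dimensions": dimensions,
            "rowLimit": row_limit,
            "startRow": start_row,
        }
        # The API omits "rows" entirely when there is nothing left to return.
        rows = query_page(body).get("rows", [])
        yield from rows
        if len(rows) < row_limit:
            break
        start_row += row_limit


def rows_to_csv(rows, dimensions: List[str], out) -> None:
    """Write API rows (each has keys, clicks, impressions, ctr, position) as CSV."""
    writer = csv.writer(out)
    writer.writerow(list(dimensions) + ["clicks", "impressions", "ctr", "position"])
    for row in rows:
        writer.writerow(
            list(row["keys"])
            + [row["clicks"], row["impressions"], row["ctr"], row["position"]]
        )


# Wiring it to the real API (needs google-api-python-client and credentials;
# SITE_URL and creds are placeholders you supply yourself):
#
#   from googleapiclient.discovery import build
#   service = build("searchconsole", "v1", credentials=creds)
#   query_page = lambda body: service.searchanalytics().query(
#       siteUrl=SITE_URL, body=body).execute()
#   with open("gsc_queries.csv", "w", newline="") as f:
#       rows = fetch_all_rows(query_page, "2024-01-01", "2024-01-31", ["query", "page"])
#       rows_to_csv(rows, ["query", "page"], f)
```

Passing the API call in as a function keeps the paging loop separate from authentication, which is usually the part that differs between Colab and a local machine.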

Find Search Queries to Optimize

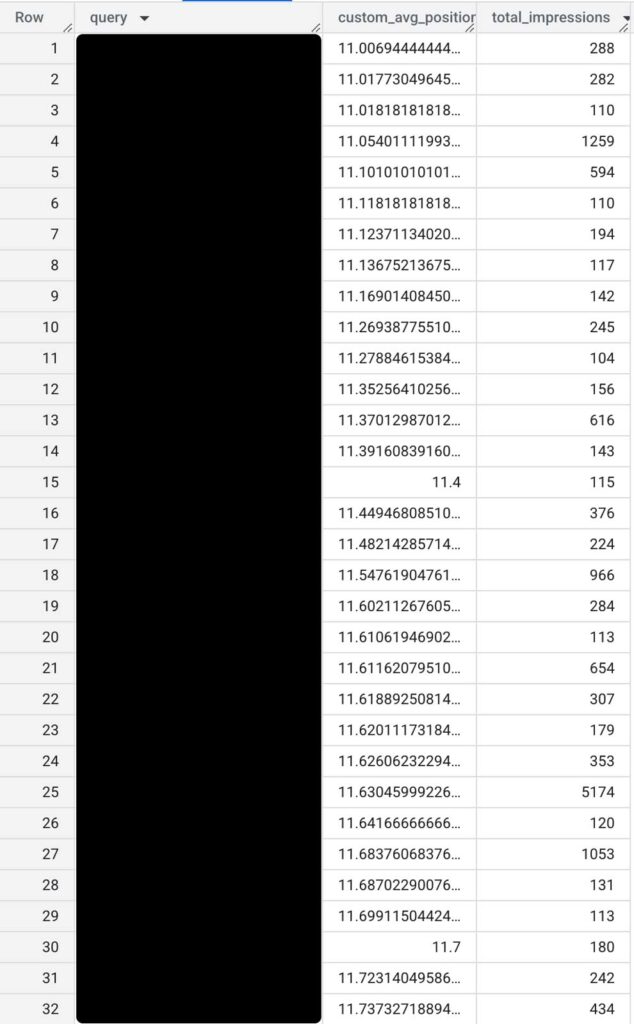

Now that BigQuery gives you access to more search data (the table I’m querying has more than 9 million rows), you can run a SQL query to find queries with more than 100 impressions in the past month and an average position between 11 and 20. Or, since Google shows fewer organic results than before, you might choose to filter on stricter criteria. Third-party tools like SEMRush are great at estimates, but with Search Console you have the actual impressions and positions for your own site.
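As a rough illustration of that filter, here’s a sketch that runs the same logic against a tiny in-memory SQLite table so it works anywhere. The table and column names follow the Search Console bulk export (`searchdata_site_impression`, `sum_top_position`, `is_anonymized_query`), but the sample rows are made up, `striking_distance` and `demo_connection` are names I’ve invented, and the SQL dialect is SQLite’s. In BigQuery you would use the fully qualified table name and `@name` parameters instead.

```python
import sqlite3

# sum_top_position is zero-based in the export, so the one-based average
# position is SUM(sum_top_position) / SUM(impressions) + 1.
STRIKING_DISTANCE_SQL = """
SELECT
  query,
  SUM(impressions) AS total_impressions,
  SUM(clicks) AS total_clicks,
  SUM(sum_top_position) * 1.0 / SUM(impressions) + 1 AS avg_position
FROM searchdata_site_impression
WHERE data_date >= :start_date
  AND NOT is_anonymized_query
GROUP BY query
HAVING SUM(impressions) > :min_impressions
   AND (SUM(sum_top_position) * 1.0 / SUM(impressions) + 1)
       BETWEEN :min_position AND :max_position
ORDER BY total_impressions DESC
"""


def striking_distance(conn, start_date, min_impressions=100,
                      min_position=11, max_position=20):
    """Return (query, impressions, clicks, avg_position) for page-two queries."""
    params = {
        "start_date": start_date,
        "min_impressions": min_impressions,
        "min_position": min_position,
        "max_position": max_position,
    }
    return conn.execute(STRIKING_DISTANCE_SQL, params).fetchall()


def demo_connection():
    """Build an in-memory table with invented rows shaped like the GSC export."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE searchdata_site_impression ("
        "data_date TEXT, query TEXT, is_anonymized_query INTEGER, "
        "impressions INTEGER, clicks INTEGER, sum_top_position REAL)"
    )
    conn.executemany(
        "INSERT INTO searchdata_site_impression VALUES (?, ?, ?, ?, ?, ?)",
        [
            ("2024-05-01", "flower photography prints", 0, 80, 2, 960.0),
            ("2024-05-02", "flower photography prints", 0, 70, 1, 910.0),
            ("2024-05-01", "home decor ideas", 0, 500, 40, 1000.0),      # ranks too well
            ("2024-05-01", "macro bloom wallpaper", 0, 60, 0, 900.0),    # too few impressions
            ("2024-03-01", "vintage rose prints", 0, 300, 5, 4200.0),    # outside date window
            ("2024-05-01", "", 1, 400, 10, 6000.0),                      # anonymized
        ],
    )
    return conn


if __name__ == "__main__":
    for row in striking_distance(demo_connection(), "2024-05-01"):
        print(row)
```

Tightening `max_position` (say, to 15) is one way to apply the stricter criteria mentioned above.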

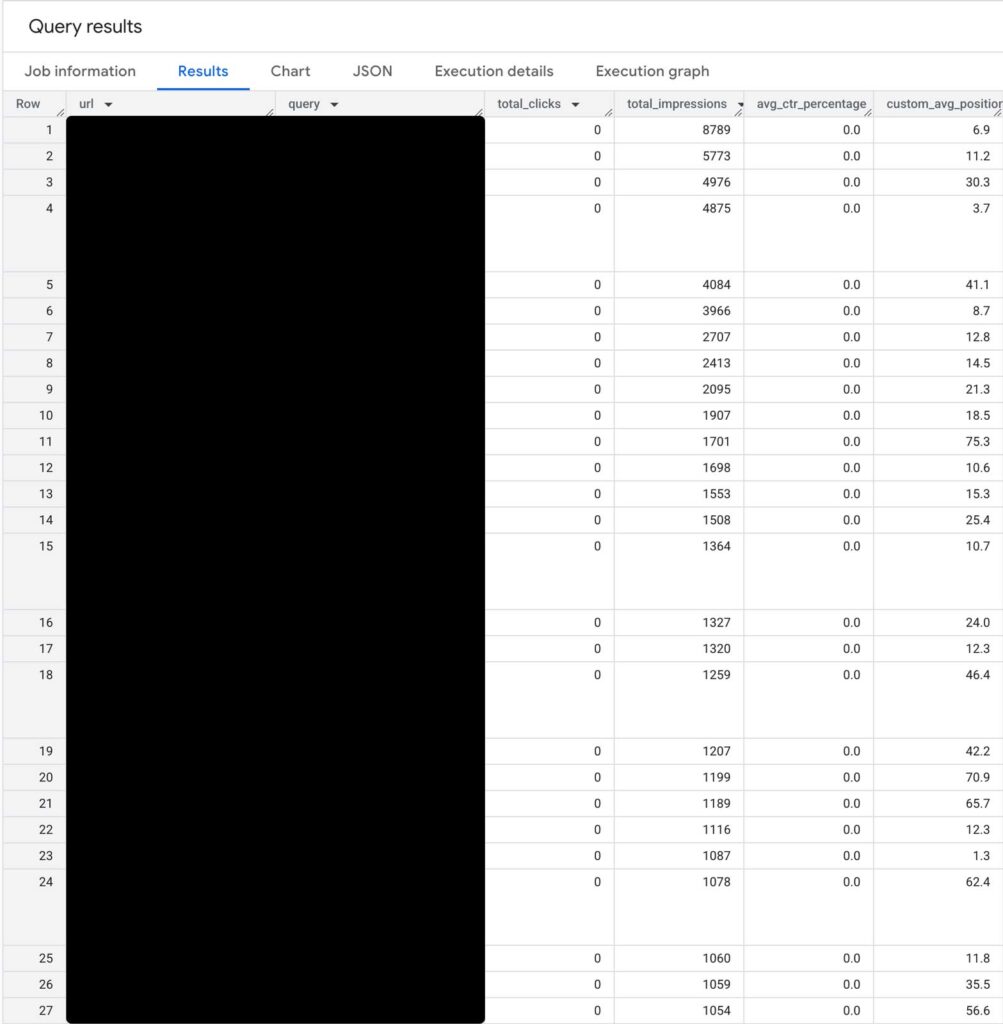

Or perhaps you want to see search queries with high impressions but low CTR. This is easy to query within BigQuery. Trying to calculate this over that much data in a spreadsheet would take a long time, if it didn’t crash your computer first.
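The same check is easy to sketch in plain Python if you’ve already exported daily rows. The function name `low_ctr_queries`, the row shape and the default thresholds below are all assumptions for illustration. Tune the cutoffs to your own site.

```python
from collections import defaultdict


def low_ctr_queries(daily_rows, min_impressions=1000, max_ctr=0.01):
    """Find queries with plenty of impressions but a weak click-through rate.

    daily_rows: iterable of dicts with "query", "clicks" and "impressions".
    CTR is computed from summed clicks and impressions, not by averaging
    daily CTRs, so low-traffic days don't distort the result.
    """
    totals = defaultdict(lambda: [0, 0])  # query -> [clicks, impressions]
    for row in daily_rows:
        totals[row["query"]][0] += row["clicks"]
        totals[row["query"]][1] += row["impressions"]

    results = []
    for query, (clicks, impressions) in totals.items():
        if impressions == 0 or impressions < min_impressions:
            continue
        ctr = clicks / impressions
        if ctr <= max_ctr:
            results.append(
                {"query": query, "clicks": clicks, "impressions": impressions, "ctr": ctr}
            )
    # Biggest missed opportunities first.
    results.sort(key=lambda r: r["impressions"], reverse=True)
    return results
```

These are often the queries where a better title or meta description pays off fastest, since the visibility is already there.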

Join GSC & GA4 Tables

It sure would be nice if Google Analytics 4 added event data to its Search Console integration. The good news is that you can do this yourself with BigQuery and build a dashboard in Looker Studio to share with your management team.

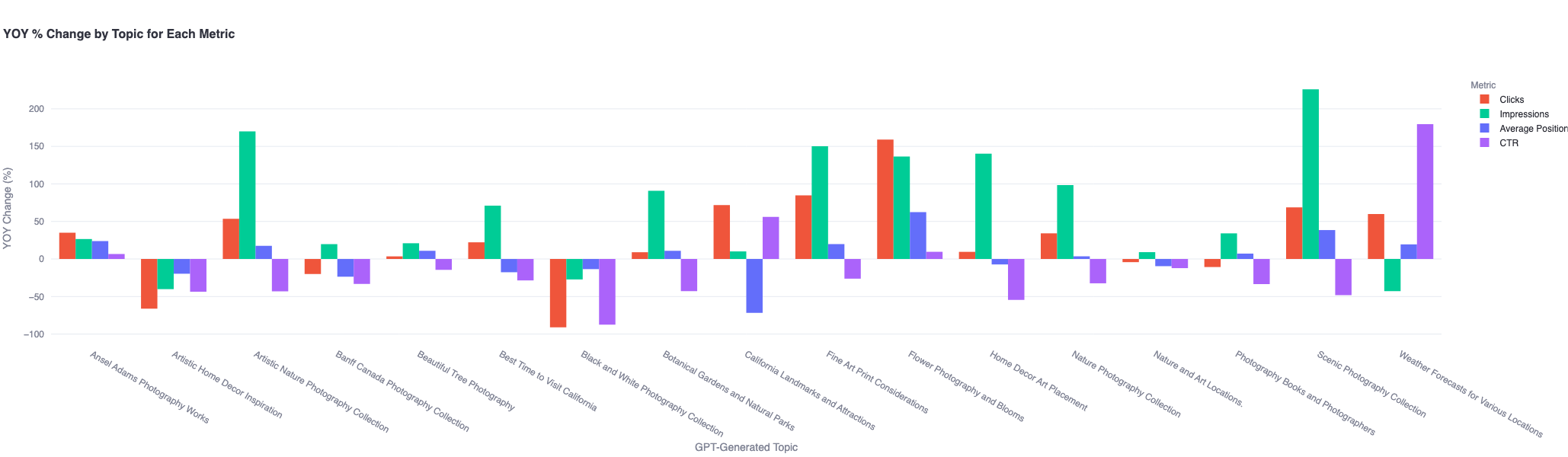

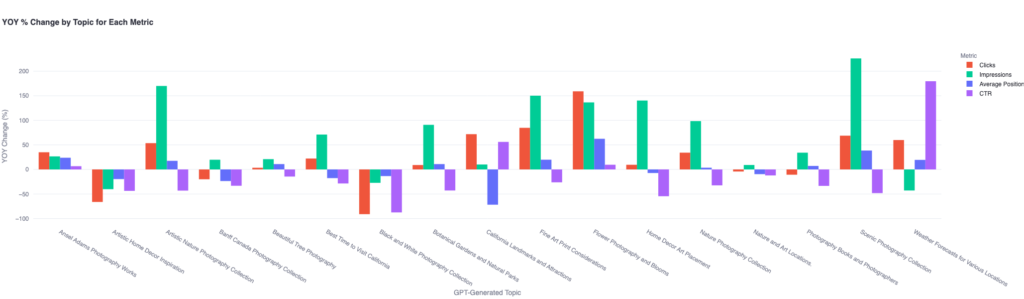
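In BigQuery this is a SQL join, but the logic is easier to see in a few lines of Python. This is only a sketch under some assumptions: the GSC rows are already aggregated to one row per date and page, the GA4 rows are already filtered to organic search sessions and the events you care about, and the row shapes and the names `normalize_url` and `join_gsc_ga4` are invented for the example. The one real-world detail it relies on is that the GA4 export stores `event_date` as a `YYYYMMDD` string.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def normalize_url(url):
    """Reduce a URL to host + path so GSC and GA4 pages line up.

    Drops the scheme, query string, fragment and trailing slash.
    """
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return f"{parts.netloc.lower()}{path}"


def join_gsc_ga4(gsc_rows, ga4_rows):
    """Left-join GSC page performance to GA4 event counts on (date, page).

    gsc_rows: dicts with "data_date" (YYYY-MM-DD), "url", "clicks", "impressions"
    ga4_rows: dicts with "event_date" (YYYYMMDD), "page_location", "event_count"
    """
    events = defaultdict(int)
    for row in ga4_rows:
        d = row["event_date"]
        iso_date = f"{d[:4]}-{d[4:6]}-{d[6:]}"  # convert GA4's YYYYMMDD to ISO
        events[(iso_date, normalize_url(row["page_location"]))] += row["event_count"]

    joined = []
    for row in gsc_rows:
        key = (row["data_date"], normalize_url(row["url"]))
        joined.append(
            {
                "date": key[0],
                "page": key[1],
                "clicks": row["clicks"],
                "impressions": row["impressions"],
                "events": events.get(key, 0),  # 0 when GA4 saw no matching events
            }
        )
    return joined
```

URL normalization matters more than it looks: tracking parameters and trailing slashes are a common reason the two datasets fail to match.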

Semantic Search / NLP + Topic Clustering + GSC

Rather than manually grouping individual search queries or being limited to a small set of individual URLs, let’s use natural language processing (NLP) to turn the search queries into vector embeddings, then group hundreds to thousands of them with a clustering algorithm like K-Means, using cosine similarity as the measure of closeness. Then we can use ChatGPT’s API to label each cluster with a topic. Our AI-generated Python script can then aggregate the data for each cluster to show growth in clicks, impressions, average position and CTR. Put all the topics into a visual chart that anyone can understand.
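Here’s a dependency-free sketch of the clustering step so you can see the moving parts. Two loud caveats: `embed` is a toy bag-of-words stand-in for a real embedding model (it only knows shared words, not meaning), and the clustering is a simple spherical K-Means with a deterministic farthest-first start rather than any particular library’s implementation. All function names are my own. Topic labeling with an LLM is left out.

```python
import math
from collections import Counter


def normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def embed(queries):
    """Toy embedding: unit-length word-count vectors. Swap in a real model."""
    vocab = sorted({w for q in queries for w in q.lower().split()})
    index = {w: i for i, w in enumerate(vocab)}
    vectors = []
    for q in queries:
        vec = [0.0] * len(vocab)
        for word, count in Counter(q.lower().split()).items():
            vec[index[word]] = float(count)
        vectors.append(normalize(vec))
    return vectors


def cosine_similarity(a, b):
    """For unit-length vectors, cosine similarity is just the dot product."""
    return sum(x * y for x, y in zip(a, b))


def spherical_kmeans(vectors, k, iterations=20):
    """Cluster unit vectors by cosine similarity; returns one label per vector."""
    # Farthest-first init: start from the first vector, then repeatedly add
    # the vector least similar to every centroid chosen so far.
    centroids = [vectors[0]]
    while len(centroids) < k:
        centroids.append(
            min(vectors, key=lambda v: max(cosine_similarity(v, c) for c in centroids))
        )
    labels = None
    for _ in range(iterations):
        new_labels = [
            max(range(k), key=lambda c: cosine_similarity(v, centroids[c]))
            for v in vectors
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:
                mean = [sum(col) / len(members) for col in zip(*members)]
                centroids[c] = normalize(mean)
    return labels


if __name__ == "__main__":
    queries = [
        "flower photography prints", "flower photography ideas",
        "spring flower photography", "home decor inspiration",
        "artistic home decor", "home decor wall art",
    ]
    for query, label in zip(queries, spherical_kmeans(embed(queries), k=2)):
        print(label, query)
```

Standard K-Means measures Euclidean distance, so if you use a library version, normalizing the embeddings to unit length first makes its notion of "close" track cosine similarity much more closely.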

Artistic home decor inspiration is struggling this month? Let’s focus on that topic cluster then. We’re seeing growth for flower photography & blooms? Let’s build a content hub around seasonal flowers and grow that further. Grouping the queries together lets us see how the site is performing holistically by topic rather than focusing on individual keywords. This is way more aligned with semantic SEO than looking at things at the individual keyword level.
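Once every query has a topic, the roll-up itself is simple. This sketch assumes you have per-query rows for a period plus a query-to-topic mapping from the clustering step. The names `aggregate_by_topic` and `click_growth` and the row shape are invented for the example. Average position is weighted by impressions so a handful of rarely seen queries can’t drag a topic’s position around.

```python
from collections import defaultdict


def aggregate_by_topic(rows, topic_of):
    """Roll per-query metrics up to topic level.

    rows: dicts with "query", "clicks", "impressions", "position"
    topic_of: dict mapping query -> topic label
    """
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0, "weighted_pos": 0.0})
    for row in rows:
        t = totals[topic_of.get(row["query"], "unclustered")]
        t["clicks"] += row["clicks"]
        t["impressions"] += row["impressions"]
        t["weighted_pos"] += row["position"] * row["impressions"]

    summary = {}
    for topic, t in totals.items():
        impressions = t["impressions"]
        summary[topic] = {
            "clicks": t["clicks"],
            "impressions": impressions,
            "ctr": t["clicks"] / impressions if impressions else 0.0,
            "avg_position": t["weighted_pos"] / impressions if impressions else None,
        }
    return summary


def click_growth(current, previous):
    """Fractional change in clicks per topic (None if there is no baseline)."""
    growth = {}
    for topic, now in current.items():
        before = previous.get(topic, {}).get("clicks", 0)
        growth[topic] = (now["clicks"] - before) / before if before else None
    return growth
```

Run `aggregate_by_topic` once per month and pass the two summaries to `click_growth`, and you have the numbers behind a topic-level chart.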

As SEOs, we have imperfect tools to work with, so let’s do something about that. Only we know what we need, so let’s build this functionality for ourselves. A lot of my needs have to do with data visualization, since I often present marketing strategy to execs. Google Search Console and GA4 are woefully inadequate for that, which inspires me to figure out solutions like the ones mentioned in this article.